An earlier post referred to using Lynx as a text browser to surf the internet.

Here is another reason why Lynx is awesome: it can go straight to a specified website address and download the entire page as a text file. It is very simple to use Lynx to download a web page as text and list all the web links present on the page.

To do this:

1. Open a terminal and type `lynx -dump [web address] > webpagename.txt`

For example, `lynx -dump www.gnu.org > gnu-as-text.txt` goes to www.gnu.org and saves the page as a text file named gnu-as-text.txt.

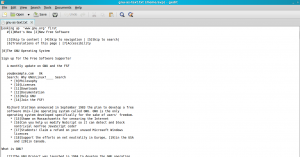
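If you do this often, the same command can be wrapped in a couple of small shell functions. This is only a rough sketch: the function names `save_page_as_text` and `list_page_links` are made up for illustration, and it assumes Lynx is installed and on your PATH. The second function relies on Lynx's `-listonly` option, which limits the dump to the list of links on the page.

```shell
#!/bin/sh

# Hypothetical helper (name is illustrative, not part of Lynx):
# dump a page as plain text into a file.
#   $1 = web address, $2 = output file name
save_page_as_text() {
    lynx -dump "$1" > "$2"
}

# Hypothetical helper (name is illustrative, not part of Lynx):
# print only the links found on a page.
#   $1 = web address
list_page_links() {
    lynx -dump -listonly "$1"
}
```

With these loaded in your shell, `save_page_as_text www.gnu.org gnu-as-text.txt` runs the same command as the gnu.org example in this post, and `list_page_links www.gnu.org` prints just the links.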

The saved text-based webpage looks like this:

By the way, wget or curl can also download a page, though they save the raw HTML rather than the rendered text. This was just one of the uses of Lynx as a purely text-based browser.

Cheers.